October 30, 2021

Code of Ethics for Artificial Intelligence: the choice is up to humans

On October 26, 2021, at the international forum "Ethics of Artificial Intelligence: the Beginning of Trust" in Moscow, Russia's largest technology companies adopted a Code of Ethics in the field of artificial intelligence. Artificial intelligence technologies make human life easier, but because they are new and spreading rapidly, many people are beginning to doubt whether the machines are really on our side. The Code is expected to increase public confidence in artificial intelligence solutions.

Humans have built many things by imitating mechanisms learned from nature, and artificial intelligence is no exception. Where a human needs time to study a task, is hindered by emotions, or simply wants to automate a process to save time, artificial intelligence can take over the decision-making. So what is the distrust of artificial intelligence associated with?

Ethics issues

A machine can be taught algorithms for performing simple actions, but matters of ethics are far more complicated, especially in critical situations where the action depends on a quick analysis of several circumstances at once.

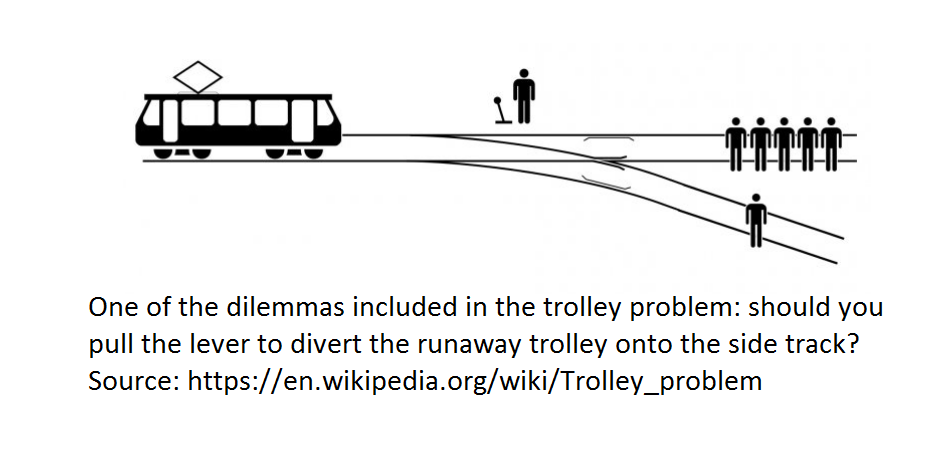

One of the most vivid and best-known examples of ethical choice is the trolley problem. In 1967, the philosopher Philippa Foot formulated a thought experiment about the choice a person makes when only two options are available. A tram is moving toward a fork: one person stands on one track and five people on the other. Which track would a person choose? Is the life of one person less valuable than the lives of five? Since then, many philosophers have devised all sorts of improbable scenarios in which a person has to make a choice. For instance, a fast-moving trolley is heading toward five people tied to the rails. The only way to stop it is to block the track with something big, and only you and a man of large build are nearby. Will you push the big man onto the track to save the five? And what if you know that this man is the villain who tied up the unfortunate five?

Now artificial intelligence has to solve the "trolley problem", only with examples closer to real life. A few years ago, researchers at the Massachusetts Institute of Technology (MIT) presented a test in which anyone can face a difficult moral choice from the position of an artificial intelligence: a self-driving car. Who will die if the car's brakes suddenly fail: the elderly people crossing in front of the car on a red light, or, if the car swerves to the other side, a man and a little boy who are crossing when they are allowed to? Will the artificial intelligence crash into a barrier that suddenly appears in its path, killing the car's own passengers (three men and one girl), or will it swerve into the lane where three elderly men and an elderly woman are crossing at the wrong time? The scenarios then become more complicated, with social status and species added. For instance, who should survive: dogs or cats, a pregnant woman, a female doctor, or others? After this, a person may wonder whether he really wants to get into a car that, in an emergency, will probably choose someone else's life over his.

These situations only seem improbable; self-driving cars may actually become the real "trolleys" of modern life. For instance, a 2018 incident in which a self-driving Uber car struck and killed a woman who was crossing the road in the wrong place received wide publicity around the world. It was the first time in history that a person died after being hit by a self-driving vehicle.

To trust or not to trust?

This is not the first time humanity has treated technological innovations with caution, if only because we are now living through the fourth industrial revolution. Humanity has already gone through the transition from manual labour to machine labour, mastered electricity, made computers and mobile phones widespread, and created robots. It is impossible to state precisely when each of these industrial revolutions took place, because countries have developed at different rates, but now almost the entire world is facing the issues of the fourth industrial revolution. The agenda includes the automation of systems, big data, cloud technologies, and the use of artificial intelligence.

Artificial intelligence technologies already recognize vehicle license plates, help catch criminals, detect suspicious bank card transactions, deliver lunches and parcels, and help find the best approach to treating patients.

Artificial intelligence is being actively embedded in our lives, and sometimes it saves them. The Siri voice assistant, which recognizes human speech, helped save its owner from hypothermia. In 2019, a man in the US lost control of his car while crossing the desert. He was injured and could not move, but he remembered in time that he could use his voice to reach Siri on his smartphone. That is how the victim managed to call rescuers.

Delegating tasks to machines once sounded fantastic and impossible, but now humans can hand over more and more tasks to their digital assistants, assuming that they will cope even better, especially when it comes to big data.

More recently, it became possible to pay for travel with Face Pay at every metro station in Moscow after linking one's personal data to a bank card. It is difficult to imagine a person who could "scan" a large passenger flow at a glance and accurately determine the balance on each bank card; this task would clearly be impossible for humans. In addition, residents of the Russian capital no longer have to touch the turnstiles to travel, which is potentially a good solution for metro users during the pandemic. On the other hand, many are beginning to ask questions about the security of their personal data, although the developers vouch for the reliability of the encryption.

Artificial intelligence is also associated with fears that many professions may become obsolete. One such case occurred at Microsoft: according to Afisha Daily, the company fired 77 journalists after deciding to replace them with artificial intelligence. Earlier, after introducing AI, Sberbank (now Sber) cut 70% of its middle-level managers.

But two considerations give hope that human resources will still be needed in the world of the future. First, it remains difficult to create a "strong" artificial intelligence that would replicate human intellect, solve diverse tasks that do not fit within the specific skills of a single profession, and solve problems that were previously impossible to resolve. If this does become possible, we will probably have a year or two to adapt while developers try to fit all these algorithms into a powerful supercomputer. Second, humans create artificial intelligence in order to help humans. This means that how far we go in developing artificial intelligence depends only on us, and we are the ones who regulate its activities and its area of responsibility in the way that suits us best.

Code of Ethics

In Russia, the Code of Ethics appeared at the suggestion of the country's President. In December 2020, the President called on the artificial intelligence development community to draw up an internal moral code. Nearly a year later, such a code was prepared and presented. It was developed by the Alliance in the field of Artificial Intelligence with the support of the Analytical Centre under the Government of the Russian Federation and the Ministry of Economic Development of Russia, in accordance with the provisions of the National Strategy for the Development of Artificial Intelligence until 2030. Before the code was published, a broad expert and public discussion took place with the participation of developers and representatives of business and government bodies.

Companies sign the Code of Ethics on a voluntary basis. To date, Sber, Yandex, VK, Rosatom, Gazprom-Neft, and other large business and educational organizations have signed it. The code is expected to serve as a guideline for the development of artificial intelligence technologies and to help build public confidence in AI, since under the code artificial intelligence is on the side of humans.

While presenting the code, Deputy Prime Minister of the Russian Federation Dmitry Chernyshenko cited a survey of residents of the United States, Germany, Australia, Canada, and the United Kingdom in which 72% of respondents said they do not trust artificial intelligence. According to the Russian Public Opinion Research Centre (VCIOM), the share of Russian residents who fear artificial intelligence is significantly lower.

"The key question we see is how to make the technology completely reliable and controllable. In Russia, there is a fairly high level of trust in artificial intelligence. According to VCIOM, 48% of Russians trust artificial intelligence. No doubt, we will work to increase the level of trust. But, of course, there are people who will not trust artificial intelligence. There will always be such people, and the task of the state is to make sure that citizens always have the opportunity to manage their affairs without 'imposed' services," says Dmitry Chernyshenko.

The Code of Ethics covers those aspects of the creation, implementation, and use of artificial intelligence technologies, at all stages of their life cycle, that are not currently regulated by the legislation and/or technical regulations of the Russian Federation. Its central principle is a humanistic approach.

Russia was one of the first countries in the world to formulate five risks and threats that come with the introduction of "digital things" into life: discrimination, loss of privacy, loss of control over AI, harm to humans caused by algorithm errors, and use for unacceptable purposes. All of these are included in the adopted "Code of Ethics of Artificial Intelligence" as threats to human rights and freedoms.

"In response to the risks, the Code approved the basic principles of implementation of AI: transparency, truthfulness, responsibility, reliability, inclusiveness, impartiality, safety and confidentiality. The principles are cross-border and supranational, since Russia plays an active role in the development of the UNESCO supranational ethical regulation. At the same time, having adopted the Russian Code of Ethics for AI, we will require foreign IT platforms and social networks to comply with Russian legislation. We have the instruments to work with foreign services, although we hope to find points of ethical consensus. I believe that the AI Code of Ethics will help the search for their algorithms," Dmitry Chernyshenko said.

Companies that have signed the code must be aware of their responsibility when creating artificial intelligence and must not use AI technologies to harm human life and health. According to the code, the responsibility for the consequences of the use of artificial intelligence always rests with humans.

Sources:

https://www.profiz.ru/upl/2021/Кодекс_этики_в_сфере_ИИ_финальный.pdf

Translated by Mukhiddin Ganiev